Calling its work groundbreaking, a group of researchers at the University of Texas at Dallas has been working on a new approach using artificial intelligence to improve control of prosthetics.

The research, which was presented last month at the 2019 IEEE International Symposium on Measurement and Control in Robotics in Houston, marks a leap forward toward the team’s goal of fully end-to-end optimization of electromyography (EMG) controlled prosthetic hands, according to a release.

The team of Soumaya Hajji, Mai-Thy Nguyen, Jacob Thomas Wilson, Elvis Nguyen, Daniel Octavio Melendez, Misael Mondragon-Lopez, Matthew Mueller, Thien Le, Darshan Palani, Farzad Karami, and Vikram Louis Michael was led by Mohsen Jafarzadeh, the release said.

Daniel Hussey, Yonas Tadesse, Cameron Ovandipour, and Ngoc Tuyet Nguyen Yount are past members of the team working on the study, “Deep Learning Approach to Control of Prosthetic Hands with Electromyography Signals.”

Tadesse is the main supervisor of this project, Jafarzadeh said, and will continue the project after Jafarzadeh graduates with his PhD in December. Other faculty supporting the research include John Hanson, Nicholas Gans, and Neal Skinner, Jafarzadeh said.

Work could lead to improved prosthetic hands

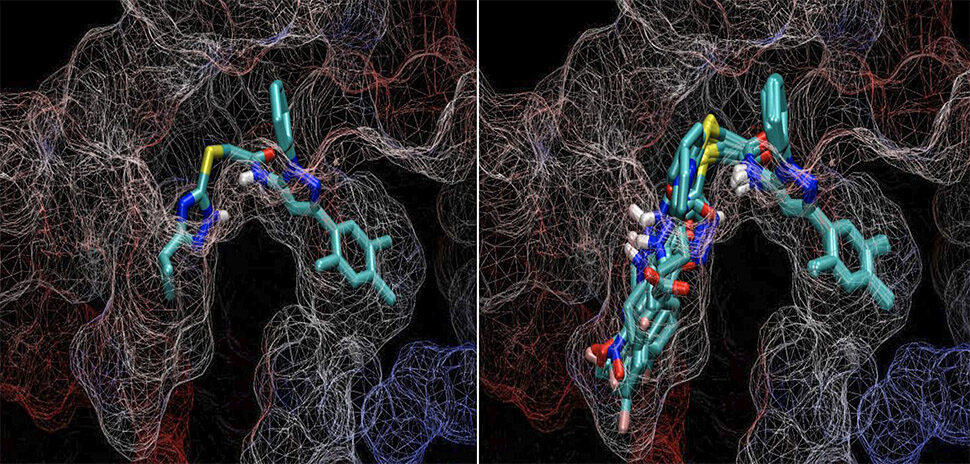

“Our solution uses a novel deep convolutional neural network to eschew the feature-engineering step,” Jafarzadeh said in the release. “Removing the feature extraction and feature description is an important step toward the paradigm of end-to-end optimization. Our results are a solid starting point to begin designing more sophisticated prosthetic hands.”

Feature engineering uses domain knowledge of the data to create features that make machine learning algorithms work.
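For a rough sense of what that step can look like with EMG data, the sketch below computes three features often hand-crafted from a window of signal samples: mean absolute value, root mean square, and zero-crossing count. This is an illustration only. The function name `emg_features` and the sample window are hypothetical, and the release does not say which features, if any, the team previously relied on.

```python
import math

def emg_features(window):
    """Compute three commonly hand-crafted features for one EMG window.

    Hypothetical helper for illustration; not taken from the team's code.
    """
    n = len(window)
    # Mean absolute value: average signal magnitude over the window.
    mav = sum(abs(x) for x in window) / n
    # Root mean square: a measure of signal power.
    rms = math.sqrt(sum(x * x for x in window) / n)
    # Zero crossings: number of sign changes between consecutive samples.
    zc = sum(1 for a, b in zip(window, window[1:]) if a * b < 0)
    return {"mav": mav, "rms": rms, "zero_crossings": zc}

# Made-up sample window, not real EMG data.
window = [0.5, -0.5, 0.5, -0.5]
features = emg_features(window)
print(features)
```

An end-to-end network of the kind Jafarzadeh describes would take the raw window as input and learn its own internal representation, so a hand-written step like this would no longer be needed.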

In the release, Jafarzadeh said that the computational resources for this research were provided by the Texas Advanced Computing Center (TACC) and The University of Texas at Austin. Using the Maverick2 supercomputer and a variety of software tools, the team was able to develop a unique EMG-based control system for prosthetic hands, the release said.

Jafarzadeh said the system can be retrained on a prosthetic user’s own data at the user’s request, making for faster hand movements. Drawing on data recorded from eight people making 15 hand gestures, the system lets people with prosthetics perform a variety of hand movements, the release said.

These requests run in real time and have an error probability of zero, according to the release.

The research can be used for other prosthetic devices with only minor changes, the release said.
