Distracted driving is a major safety issue on U.S. highways, and Dallas-based EdgeTensor Technologies is bringing artificial intelligence technology out of the cloud and behind the steering wheel to monitor drivers. The technology, originally developed for autonomous vehicles, detects distracted drivers in real time and alerts the driver, according to the company.

To do so, EdgeTensor has developed a low-cost, mass-market AI software development kit, its deep learning-based Face SDK, that relies on edge computing and works with any camera, such as the one in a smartphone, to monitor drivers for distraction.

The scale of the problem is significant. Per the National Highway Traffic Safety Administration, 41 percent of all crashes are caused by distracted driving, and the Centers for Disease Control and Prevention estimates that nine people are killed each day in the U.S. due to distracted driving. And the problem persists even in self-driving and semi-autonomous vehicles.

MARKET OPPORTUNITY: DRIVER MONITORING SYSTEMS (DMS)

Driver monitoring systems (DMS), a potentially valuable market for EdgeTensor, look set to become part of the next generation of intelligent vehicles. In fact, regulators in the EU are preparing for DMS to become a standard feature on new cars by 2020.

For self-driving and autonomous vehicles, the National Highway Traffic Safety Administration uses a classification system of levels, 0 through 5. In a nutshell, here is what each level means:

Level 0 — That would be you behind the wheel steering, operating the gas and the brake, just as you currently are.

Level 1 — The driver still controls most functions, but the car can automatically handle a specific function such as steering or acceleration.

Level 2 — At least one driver assistance system, such as cruise control combined with lane-centering, is automated. The driver must still stay alert and ready to take control, though.

Level 3 — Drivers must be present in the vehicle and can divert “safety-critical functions” to the vehicle, but must intervene if necessary to ensure safety.

Level 4 — This is a “fully autonomous” level for vehicles that are “designed to perform all safety-critical driving functions and monitor roadway conditions for an entire trip.” It doesn’t cover all driving scenarios, however.

Level 5 — The fully autonomous system is expected to perform as well as a human driver in all driving situations, including extreme environments.

Level 3 (conditional automation) and Level 4 (high automation) autonomous vehicles require driver monitoring to alert drivers when they need to intervene, and even Level 5 (complete automation) vehicles require user authentication and in-vehicle monitoring to enhance the user experience, safety, and security.

“Safety and security are the most important aspect in the current generation vehicles and the next generation (of) autonomous vehicles — and these can be solved with AI technology,” EdgeTensor CEO and co-founder Rajesh Narasimha told Dallas Innovates.

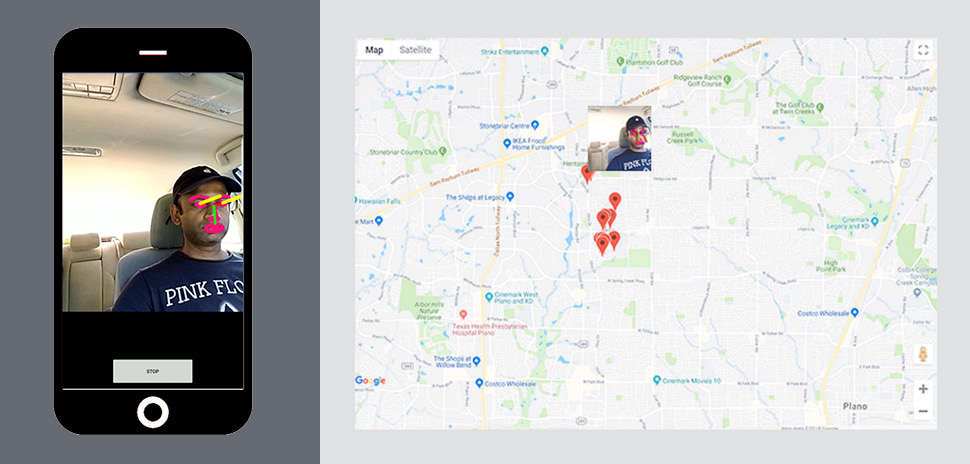

![An EdgeTensor dashboard. [Courtesy of EdgeTensor]](https://s24806.pcdn.co/wp-content/uploads/2018/09/Edgetensor02_SourceArt-Edgetensor.jpg)

An EdgeTensor dashboard. [Courtesy of EdgeTensor]

Narasimha founded the company in February with CTO and co-founder Soumitry Ray. Both were part of Metaio, a German AR startup that Apple acquired in 2015 and whose technology has since become Apple’s ARKit.

Between them, Narasimha and Ray hold more than 75 patents and publications, and both earned Ph.D.s from the Georgia Institute of Technology.

The company is headquartered in Dallas, where Narasimha and the sales and marketing team are based, while Ray works from an office in India with the engineering team.

FROM THE CLOUD TO THE EDGE

The “edge” in EdgeTensor comes from its use of “edge computing” to drive its technology. Simply put, an edge device is any device that can perform computations locally rather than sending data to the cloud for processing, bypassing cloud computing roadblocks such as speed, latency, privacy, reliability, and safety concerns.

Narasimha described the smartphone as a great example of an edge device: it can run AI algorithms and sends only event-driven information to the cloud.
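To make the event-driven idea concrete, here is a minimal Python sketch; it is not EdgeTensor’s code. It assumes a hypothetical on-device pipeline that has already labeled each camera frame as distracted or not. The hypothetical `frames_to_events` helper collapses those labels into distraction episodes, and only those small JSON summaries would be uploaded while the frames stay on the device. The 640x480 RGB frame size used for comparison is also an illustrative assumption.

```python
import json
from typing import Dict, List, Optional, Tuple

# Hypothetical raw frame size for comparison: 640x480 pixels, 3 bytes per pixel (RGB).
RAW_FRAME_BYTES = 640 * 480 * 3


def frames_to_events(labels: List[Tuple[float, bool]]) -> List[Dict[str, float]]:
    """Collapse per-frame (timestamp_s, distracted) labels into distraction episodes.

    In this sketch only the episode summaries are sent to the cloud;
    the frames themselves never leave the device.
    """
    events: List[Dict[str, float]] = []
    start: Optional[float] = None
    last_ts: Optional[float] = None
    for ts, distracted in labels:
        if distracted and start is None:
            start = ts  # an episode begins
        elif not distracted and start is not None:
            events.append({"start_s": start, "end_s": ts})  # the episode ends
            start = None
        last_ts = ts
    if start is not None and last_ts is not None:
        # Close an episode that is still open when the stream ends.
        events.append({"start_s": start, "end_s": last_ts})
    return events


if __name__ == "__main__":
    fps = 30
    # Ten seconds of hypothetical labels: distracted from 4.0 s up to 6.0 s.
    labels = [(i / fps, 4.0 <= i / fps < 6.0) for i in range(10 * fps)]
    events = frames_to_events(labels)
    payload = json.dumps(events).encode("utf-8")
    print(events)
    print(f"raw video: {len(labels) * RAW_FRAME_BYTES} bytes, uploaded: {len(payload)} bytes")
```

Even in this toy case, ten seconds of raw video amounts to hundreds of megabytes, while the uploaded summary is a few dozen bytes, which is the kind of saving in bandwidth and cloud storage that Ray points to.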

“Another important aspect of edge computing that usually does not get highlighted is how the amount of data sent to the cloud and the power consumed to store and process this data can be optimized,” said Ray. “Today, the cost of storing data securely, uploading and downloading content, and the temperature-controlled facility to keep the data is significant, and edge computing alleviates these issues.”

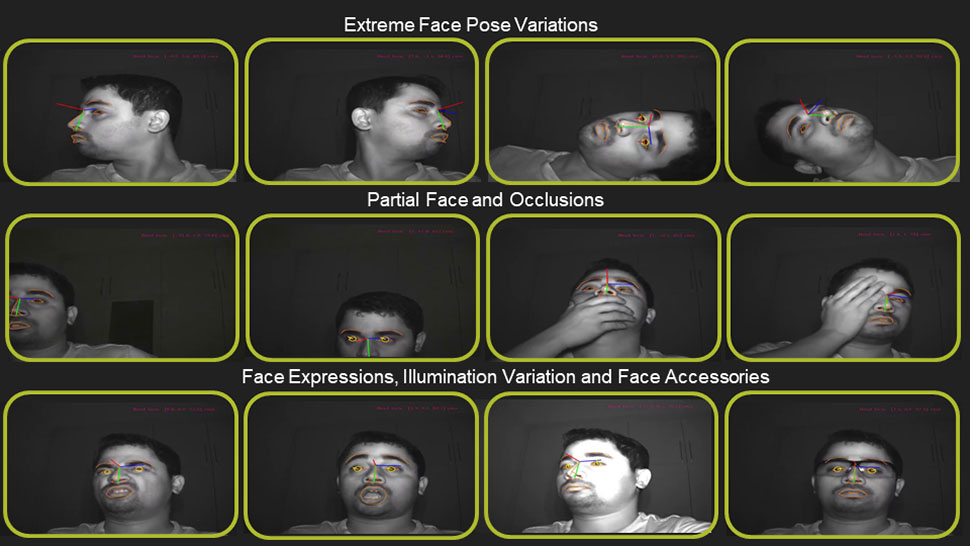

What sets EdgeTensor apart from its driver-monitoring competitors is its proprietary AI inference engine, which uses deep learning algorithms optimized for low-cost computing devices. The hardware needed to run it at 30 to 40 frames per second can be found in low-priced smartphones. And because EdgeTensor’s solution works with a wide range of cameras, it is compatible with many devices.
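The 30 to 40 frames-per-second figure translates into a per-frame time budget. At f frames per second, the whole pipeline has roughly 1000/f milliseconds per frame: about 33.3 ms at 30 fps and 25 ms at 40 fps. The short Python sketch below checks whether a set of stage latencies fits that budget. The stage names and millisecond values are hypothetical and are not measurements of EdgeTensor’s engine.

```python
from typing import Dict


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at the given frame rate, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_budget(stage_latencies_ms: Dict[str, float], fps: float) -> bool:
    """True if the stages, run one after another, finish within one frame interval."""
    return sum(stage_latencies_ms.values()) <= frame_budget_ms(fps)


if __name__ == "__main__":
    # Hypothetical per-stage latencies on a low-cost phone (illustrative only).
    stages = {"face_detection": 9.0, "landmarks": 6.0, "head_pose_and_gaze": 8.0}
    for fps in (30, 40):
        print(f"{fps} fps: budget {frame_budget_ms(fps):.1f} ms, "
              f"pipeline {sum(stages.values()):.1f} ms, fits={fits_budget(stages, fps)}")
```

The check assumes the stages run sequentially on each frame. A pipelined design that overlaps stages across CPU cores or a mobile GPU would relax the constraint.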

In action, EdgeTensor’s technology tracks facial cues such as gaze and head pose and warns distracted drivers with audible alerts or by vibrating the steering wheel or seat. Combining the mobile app with EdgeTensor’s cloud portal creates a history that lets managers proactively identify problematic roadways or caution drivers who are observed using mobile phones for texting or other activities instead of watching the road.
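As a rough illustration of how gaze and head-pose readings could drive an alert, here is a minimal Python sketch. It is not EdgeTensor’s algorithm. It assumes a hypothetical face tracker that reports head yaw and pitch in degrees plus a flag for whether gaze is on the road. A frame counts as “eyes off road” when the head turns or tilts past fixed limits or gaze leaves the road, and the alert fires only once that state has lasted a set time, so a quick mirror check does not trigger a warning. The `FaceReading` and `DistractionMonitor` names and all thresholds are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FaceReading:
    """One frame of hypothetical face-tracker output."""
    timestamp_s: float
    yaw_deg: float    # head rotation left/right; 0 = facing forward
    pitch_deg: float  # head rotation up/down; negative = looking down
    gaze_on_road: bool


class DistractionMonitor:
    """Signals an alert while eyes-off-road has lasted at least alert_after_s seconds."""

    def __init__(self, max_yaw_deg: float = 30.0, max_pitch_down_deg: float = 20.0,
                 alert_after_s: float = 2.0) -> None:
        self.max_yaw_deg = max_yaw_deg
        self.max_pitch_down_deg = max_pitch_down_deg
        self.alert_after_s = alert_after_s
        self._off_road_since: Optional[float] = None  # start of the current episode

    def is_eyes_off_road(self, r: FaceReading) -> bool:
        head_turned = abs(r.yaw_deg) > self.max_yaw_deg
        head_down = r.pitch_deg < -self.max_pitch_down_deg
        return head_turned or head_down or not r.gaze_on_road

    def update(self, r: FaceReading) -> bool:
        """Feed one frame; return True while the alert should be active."""
        if not self.is_eyes_off_road(r):
            self._off_road_since = None  # driver looked back at the road; reset the timer
            return False
        if self._off_road_since is None:
            self._off_road_since = r.timestamp_s
        return r.timestamp_s - self._off_road_since >= self.alert_after_s


if __name__ == "__main__":
    monitor = DistractionMonitor()
    # Hypothetical driver looking down at a phone, sampled every 0.1 s.
    for i in range(31):
        t = i * 0.1
        if monitor.update(FaceReading(t, yaw_deg=0.0, pitch_deg=-35.0, gaze_on_road=False)):
            print(f"alert at t={t:.1f} s")  # here an app could sound a chime or vibrate the seat
            break
```

Requiring the off-road state to persist trades a short detection delay for fewer false alarms; a production system would likely tune the limits per vehicle and camera position.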

A dashboard includes a GPS-enabled map that pinpoints where distractions occurred, along with supporting evidence in the form of images and video clips.

EDGETENSOR MEETING A GROWING NEED

EdgeTensor’s tech arrives at a time when there is general recognition of the need for driver monitoring systems in a variety of situations, including monitoring the safety drivers in Level 4 automated test vehicles, such as Uber vehicles in Phoenix, said Thomas Bamonte, senior program manager for automated vehicles at the North Central Texas Council of Governments.

Bamonte also pointed to drivers of current Level 2 and Level 3 vehicles, and even of non-automated vehicles, as groups in need of driver monitoring technology, since distractions like texting are endemic.

“If EdgeTensor can deliver a driver monitoring product using off-the-shelf computing (e.g., smartphone) at an attractive price, it might get significant traction in the consumer and delivery business markets,” said Bamonte.

EdgeTensor isn’t the only area company active in the autonomous vehicle space, according to Bamonte. “North Texas has a large and growing role in the automated vehicle ecosystem,” he said.

“For example, TI is active in the area of Advanced Driver Assistance Systems, which are the building blocks for semi-automated and ultimately fully automated vehicles,” said Bamonte. “Ericsson and AT&T are just two of a number of North Texas companies working on next-generation communications systems (e.g., 5G) that will be used for vehicle-to-vehicle and vehicle-to-infrastructure communications.”

EdgeTensor is working with Tier 1 automotive companies as well as truck, bus, and taxi fleet companies, according to Narasimha, and is fielding requests from usage-based insurance companies, app-based rideshare companies, and telematics and mapping services companies to integrate its Face SDK for various applications.

Its Face SDK handles a number of functions, including:

- Face tracking with occlusion reasoning

- Head-pose tracking and location

- Gaze direction and location

- Age and gender

- Face recognition

- Face identification/verification

- Emotion recognition

And beyond facial tracking, the SDK can also be used for human detection and pose tracking, vehicle detection and identification, and license plate recognition. This means the tech has applications beyond distracted driving, such as enhancing security in public places like schools, airports, stadiums, and hospitals as well as applications in robotics, smart cities, digital signage, and marketing.

“We believe EdgeTensor’s AI technology can save lives, keep businesses and public places safer, and help retailers provide the right shopping experience,” Narasimha said.
