Dallas-based global semiconductor giant Texas Instruments today announced a new family of six Arm Cortex-based vision processors that allow designers to add more vision and AI processing at a lower cost, and with better energy efficiency, in applications such as video doorbells, machine vision, and autonomous mobile robots.

The company said the processors will build on innovations that advance intelligence at the edge.

“In order to achieve real-time responsiveness in the electronics that keep our world moving, decision-making needs to happen locally and with better power efficiency,” Sameer Wasson, VP of processors at Texas Instruments, said in a statement. “This new processor family of affordable, highly integrated SoCs will enable the future of embedded AI by allowing for more cameras and vision processing in edge applications.”

TI said the new family, which includes the AM62A, AM68A and AM69A processors, is supported by open-source evaluation and model development tools, and common software that is programmable through industry-standard application programming interfaces, frameworks, and models.

The platform of vision processors, software, and tools helps designers easily develop and scale edge AI designs across multiple systems while accelerating time to market, TI said.

TI said its new vision processors bring intelligence from the cloud to the real world by eliminating cost and design complexity barriers when implementing vision processing and deep learning capabilities in low-power edge AI applications.

Next-level AI applications

Each processor is a highly integrated system-on-a-chip (SoC).

Integrated components include Arm Cortex-A53 or Cortex-A72 central processing units, a third-generation TI image signal processor, internal memory, interfaces, and hardware accelerators that deliver from 1 to 32 tera operations per second (TOPS) of AI processing for deep learning algorithms.

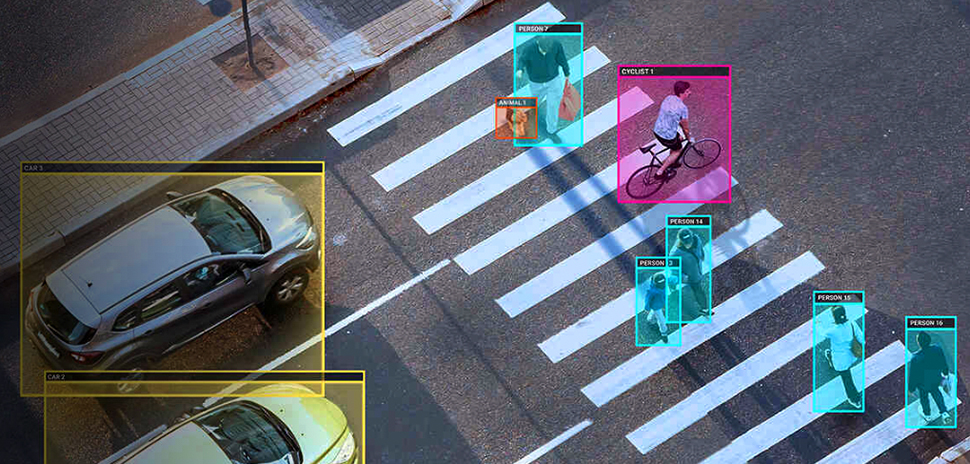

One of the chips, the AM69A, achieves 32 TOPS of AI processing for one to 12 cameras in high-performance applications such as edge AI boxes, autonomous mobile robots, and traffic monitoring systems.

TI said that, beginning in the second quarter of this year, designers can accelerate time to market for their edge AI applications with a public beta of Edge AI Studio, TI’s free open-source tool.

TI calls it a feature-rich, web-based tool that lets users quickly develop and test AI models, using either their own models or TI’s optimized models, which can also be retrained with custom data.
