Researchers at Southern Methodist University and Northwestern University are collaborating on a new technology that lets cameras see through nearly anything, including people. They have developed a holographic camera that can record high-resolution images and holograms of objects that are hidden around corners or otherwise obscured from view.

That means drivers could avoid accidents at blind corners and soldiers could “see” behind walls. Researchers say the applications in defense, hazard identification, and medicine are broad. Examples include early-warning navigation systems for cars and industrial inspection, such as checking jet engines for fatigue. In medicine, it could replace colonoscopies by seeing inside the intestines.

Called Synthetic Wavelength Holography, the tech transforms real-world surfaces into “illumination and imaging portals,” SMU said in a news release. AI is used to reconstruct hidden objects that are indirectly illuminated by light scattered onto them. That means images can be captured through fog, and faces could be identified around corners, the researchers detailed in a recent study published in Nature Communications.

DARPA project

SMU Lyle School researchers started working on the imaging technology in 2016. SMU Lyle was awarded $5.06 million by the Defense Advanced Research Projects Agency (DARPA) as part of a multimillion-dollar effort funded under the agency’s REVEAL program.

SMU dubbed the overall project OMNISCIENT, which focuses on a “light-based see-through-wall/see-around-corners x-ray-vision-type technology.” The OMNISCIENT/REVEAL project brings together researchers in the fields of computational imaging, computer vision, signal processing, information theory, and computer graphics. SMU leads the overall effort and collaborates with fellow engineers at Rice, Northwestern, Carnegie Mellon, and Harvard University.

In 2016, Marc Christensen, dean of the Bobby B. Lyle School of Engineering at SMU and principal investigator for the DARPA project, said the work would allow the school to build a holographic 3-D image of something that is out of view.

“Your eyes can’t do that,” Christensen said at the time. “It doesn’t mean we can’t do that.”

Today, Christensen says he’s pleased with the progress. “We’re confident our work in this area will lead to new inventions and approaches across several industries as technology continues to evolve and improve how we capture and interpret various wavelengths of light, both seen and unseen. It’s another example of the Lyle School’s commitment to creating new economic opportunities while meeting the most difficult challenges facing society,” he said in a statement.

Christensen is joined by co-principal investigators Duncan MacFarlane, associate dean for Engineering Entrepreneurship, Bobby B. Lyle Centennial Chair in Engineering Entrepreneurship, and professor of electrical engineering, and Prasanna Rangarajan, assistant professor of electrical and computer engineering.

How it works

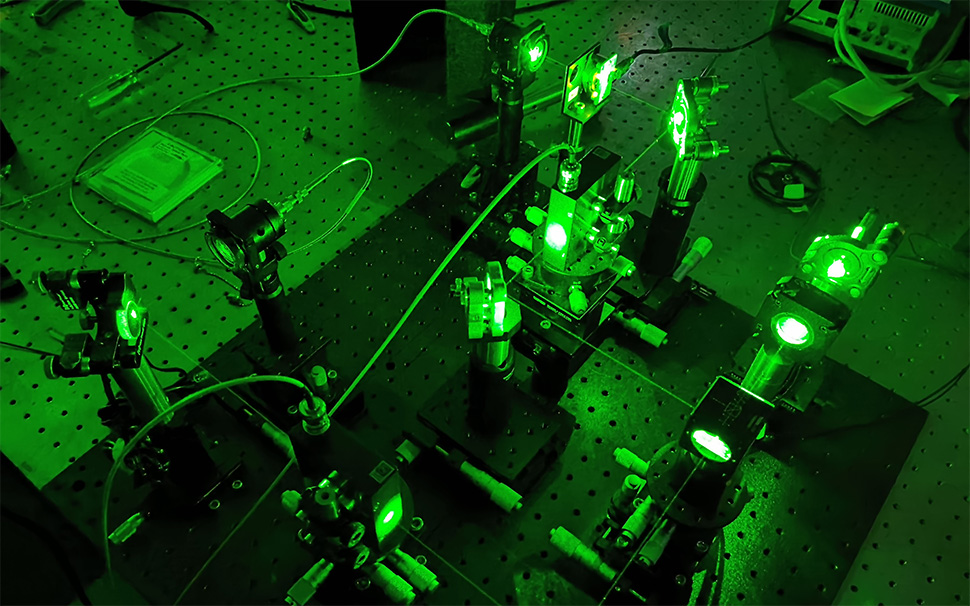

Parts of the new technology were developed in SMU’s Photonics Architecture Lab, which is led by Rangarajan, the study’s second author. He says the scientific principles that underlie the recent study bear a striking resemblance to the human perception of beat notes. Beat notes are periodic variations in sound level that result from the interaction of two closely spaced tones or audio frequencies, he explains.

“By combining laser light of two closely spaced colors, we synthesize an optical beat note, which bounces off obscured objects,” Rangarajan said in a statement. “Monitoring the relative change in the phase of the transmitted and received optical beat note allows us to locate hidden objects (echolocation) and assemble a hologram of the hidden objects.”
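The beat-note idea can be made concrete with a little arithmetic. The sketch below is illustrative only and is not taken from the study: it uses the standard relation for the beat ("synthetic") wavelength of two closely spaced wavelengths, Λ = λ1·λ2 / |λ1 − λ2|, and shows how a measured phase shift of the beat maps to an optical path difference. The function names and the 1000 nm and 1001 nm laser wavelengths are hypothetical values chosen for easy arithmetic.

```python
import math


def synthetic_wavelength(lambda1_m: float, lambda2_m: float) -> float:
    """Beat ("synthetic") wavelength, in meters, of two optical wavelengths."""
    if lambda1_m == lambda2_m:
        raise ValueError("wavelengths must differ to produce a beat note")
    return lambda1_m * lambda2_m / abs(lambda1_m - lambda2_m)


def path_from_phase(phase_rad: float, synthetic_m: float) -> float:
    """Optical path difference implied by a beat-note phase shift.

    The result is only unambiguous within one synthetic wavelength,
    because the phase wraps every 2*pi.
    """
    wrapped = phase_rad % (2 * math.pi)
    return wrapped / (2 * math.pi) * synthetic_m


# Hypothetical laser wavelengths, 1 nm apart (assumed, not from the study).
lam1 = 1000e-9
lam2 = 1001e-9

beat = synthetic_wavelength(lam1, lam2)      # roughly 1 mm
half_cycle = path_from_phase(math.pi, beat)  # half of the synthetic wavelength

print(f"synthetic wavelength: {beat * 1e3:.3f} mm")
print(f"path difference for a pi phase shift: {half_cycle * 1e3:.4f} mm")
```

With these assumed values, two wavelengths only 1 nm apart produce a beat about a millimeter long, roughly a thousand times longer than either optical wavelength. How a path difference translates into an object's position depends on the geometry of the actual setup, which this sketch does not model.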

Rangarajan’s group teamed with Northwestern researchers to develop Non-Line-of-Sight (NLoS) cameras based on Synthetic Wavelength Holography.

The study’s first author, Northwestern researcher Florian Willomitzer, says the current sensor prototypes use visible or (invisible) infrared light, but because the principle is universal, it could be extended to other wavelengths. “For example, the same method could be applied to radio waves for space exploration or underwater acoustic imaging. It can be applied to many areas, and we have only scratched the surface,” he said in a statement.

Murali Balaji, a doctoral candidate in the SMU Lyle School and co-author of the study, sees another extension.

“It may soon be possible to build cameras that not only see through scattering media, but also sniff out trace chemicals within the scattering medium. The technology relies on photo-mixers to convert optical beats into physical waves at the TeraHertz Synthetic Wavelength,” Balaji said in a statement.

Complementary innovation

SMU says the new tech complements a previous innovation by its team. Last year, the group partnered with Rice University to demonstrate NLoS cameras with “the highest resolving power.” By combining insights from Cold War-era lensless imaging techniques with modern deep learning techniques, the team resolved tiny features on hidden objects, SMU says.
