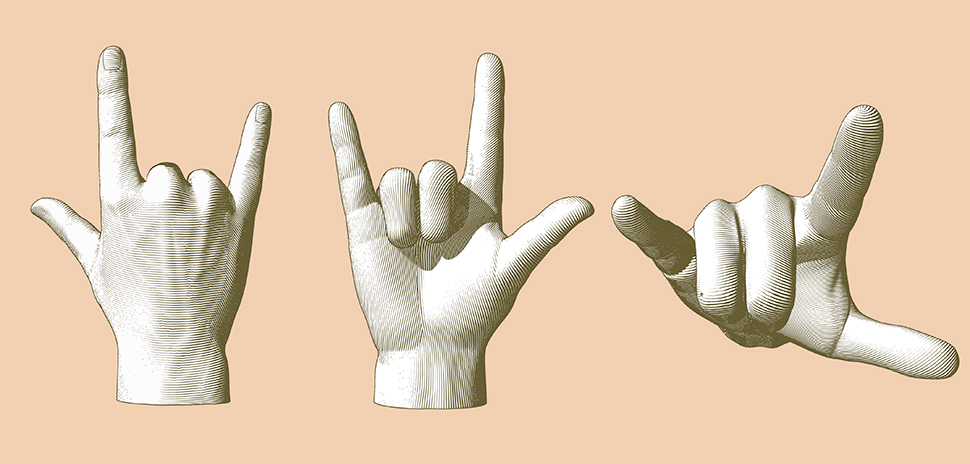

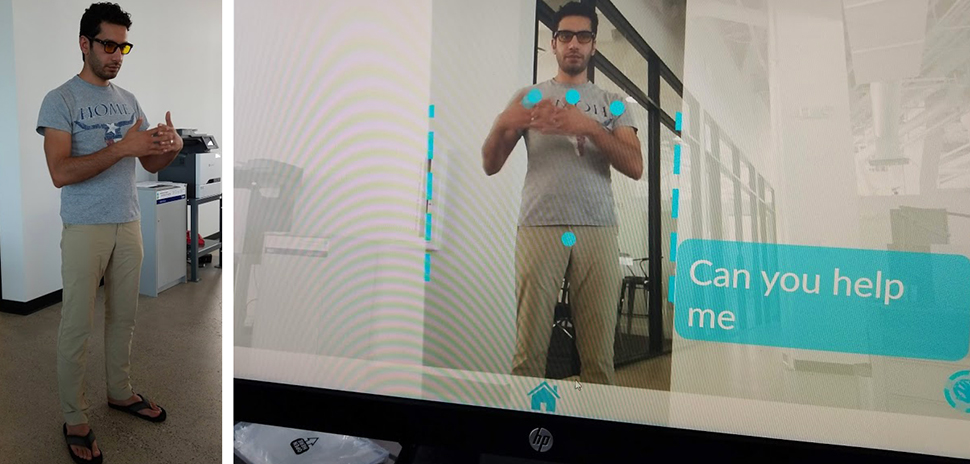

Mohamed Elwazer quickly signs phrases in front of an Xbox Kinect camera, introducing himself and asking for help. He ends each sentence by putting his hands at his sides.

Almost instantly, KinTrans technology translates Elwazer’s body movements into text and voice, allowing everyone to understand what he’s saying. In some cases, a person could even talk back to the computer, and an avatar would translate the speech into sign language.

This sign language translation technology could revolutionize the lives of deaf people, especially as it moves to mobile devices and turns any cell phone or tablet into a sign language translator.

Elwazer and his wife, Catherine Bentley, started KinTrans to improve the lives of deaf and mute people, but the benefits go far beyond that.

“We were inspired by the challenges of recognizing sign language,” Bentley said. “That’s how we got to this technology that can translate sign language and voice to text but it can also do so many other things. We designed for the deaf community first and what it gave us is this robust engine that can do so much more.”

Mohamed Elwazer quickly signs phrases in front of an Xbox Kinect camera. [Photo: Courtesy of KinTrans]

The same technology could be applied to virtual reality, augmented reality, corporate training, data visualization and manipulation, building security, home automation, and even gaming.

Bentley sees endless opportunities: she believes natural human body movement and voice will replace clicks and keyboards in the future, and that the potential for that technology is largely untapped.

“Technology is not going to be driven by our clicks or through devices on thousands of apps. It’s going to be much more streamlined based on our profile as a human being,” she said. “Technology will be able to intuitively make the decisions that we do manually now, it’s going to intuitively know what we need when we need it by using voice recognition, video, and movement. All of this is going to come together someday.”

KinTrans recently moved from Austin to Capital Factory+The DEC’s new location on Oak Lawn Avenue in Dallas.

The startup was selected to participate in the Microsoft Extreme Labs program, where it will be assigned three or four engineers who will build a new interface for virtual and augmented reality.

It’s a senior capstone project for students at Southern Methodist University. Once the API is completed, they will make it public so people can build their own programs with it.

“We can watch and see what people try to do with it,” Bentley said.

KinTrans has mostly been self-funded, but it did receive a $100,000 grant from the organizers of the next World Expo, to be held in Dubai in 2020.

THE USES FOR KINTRANS

KinTrans has already launched pilot programs around the world in bus stations and other places where people can interact with an avatar that works like a sign language bot. KinTrans technology can be found as far away as Australia and the United Arab Emirates and as close as Austin and Washington, D.C.

The tech is capable of understanding signs in multiple languages and different dialects thanks to Elwazer, who speaks and signs multiple languages.

Beyond that, there’s potential for this technology to speed up the adoption of virtual and augmented reality. With KinTrans, users would be able to interact with a digital world without controllers in their hands. New mobile devices, including the iPhone X, come with 3D adaptive cameras that can sense depth and body motion. KinTrans embraces that technology and combines it with its own software that understands human motion.

“Widespread adoption of VR hasn’t happened not only because of cost but because of value,” Bentley said. “Why should I spend $2,000 on this whole set-up and then my games when I’m still using clunky devices? We’re delivering this intuitive experience so the value proposition goes up and more adoptions happened.”

Employees could work on virtual engines or other training scenarios.

Taking things a step further, if KinTrans combines its technology with recent advancements in haptic technology, users could feel and touch things that aren’t there and interact with them, such as a tool for a training scenario or a weapon in a shooting game.

CREATING IMMERSIVE EXPERIENCES

“Now that we have movement recognition and we have haptics, we can really have immersive experiences in the gaming world or the corporate world,” Bentley said.

Data visualization could be another huge market, as executives or keynote speakers present information in VR/AR that can be manipulated or moved around with the swipe of a hand.

Security firms could use the depth-sensing cameras to watch for unusual or suspicious body movement, then use artificial intelligence to assess outliers and send an alert. That means security guards wouldn’t have to watch hundreds of different screens to find the one threat.

For home automation, the cameras could sense when someone comes home, then automatically open the garage door, turn on the lights, put the television on a favorite show, or turn on the music. Hop into bed, and all the lights go out and the doors lock for the night.

Interior designers could use it to virtually move furniture around the house, knock down walls, or try new cabinets with a few hand movements.

KinTrans will continue advancing these other use cases while focusing on its mission to help deaf and mute people communicate instantly.

“Nobody else is translating sign language, and nobody understands how big that market is,” Bentley said.
